K3S Private Deployment in Practice

Preparation

Hardware

- Management K3S master nodes: 3 machines (master101, master102, master103)

- Business K3S master nodes: 3 machines (master151, master152, master153)

- Worker nodes: N machines, as many as needed (worker01, worker02, ...)

Software

Base configuration

- Operating system: Ubuntu Server 22.04

  Minimal installation, including: openssh-server, iputils-ping, net-tools, vim

      PRETTY_NAME="Ubuntu 22.04.5 LTS"
      NAME="Ubuntu"
      VERSION_ID="22.04"
      VERSION="22.04.5 LTS (Jammy Jellyfish)"

- Management cluster

| Hostname | IP | Notes |
|---|---|---|
| database | 192.168.198.100 | Database + NFS storage; add HA if needed |
| master101 | 192.168.198.101 | Management K3S master |
| master102 | 192.168.198.102 | Management K3S master |
| master103 | 192.168.198.103 | Management K3S master |

- Business cluster

| Hostname | IP | Notes |
|---|---|---|
| master151 | 192.168.123.151 | Business K3S master + worker |
| master152 | 192.168.123.152 | Business K3S master + worker |
| master153 | 192.168.123.153 | Business K3S master + worker |
| worker161 | 192.168.123.161 | Business worker |
| worker162 | 192.168.123.162 | Business worker |

Database host configuration

Docker

- Prepare the Docker environment

      curl -fsSL https://get.docker.com | sh -

- Verify Docker

      docker images
      REPOSITORY   TAG   IMAGE ID   CREATED   SIZE

- Configure a registry mirror

      cat /etc/docker/daemon.json
      {
        "insecure-registries": ["0.0.0.0/0"],
        "registry-mirrors": ["https://xxx.mirror.swr.myhuaweicloud.com"]
      }

- Restart Docker

      systemctl restart docker
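The step above shows the resulting daemon.json but not how it is created. A minimal sketch of one way to write it, assuming a hypothetical helper named write_daemon_json and taking the target path as a parameter so it can be tried on a scratch file before touching /etc/docker:

```shell
#!/bin/sh
# write_daemon_json TARGET MIRROR_URL
# Hypothetical helper: writes a Docker daemon config that trusts any
# registry over plain HTTP / self-signed TLS and uses one registry mirror.
write_daemon_json() {
  target=$1
  mirror=$2
  cat > "$target" <<EOF
{
  "insecure-registries": ["0.0.0.0/0"],
  "registry-mirrors": ["$mirror"]
}
EOF
}

# Demo against a temporary file; on the real host the target would be
# /etc/docker/daemon.json, followed by "systemctl restart docker".
demo_file=$(mktemp)
write_daemon_json "$demo_file" "https://xxx.mirror.swr.myhuaweicloud.com"
cat "$demo_file"
```

The mirror URL is the placeholder used in this article; replace it with your own mirror endpoint.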

Databases

PostgreSQL

- Create compose.yml

      services:
        postgres:
          image: postgres:alpine
          container_name: postgres
          restart: unless-stopped
          environment:
            POSTGRES_USER: k3s
            POSTGRES_PASSWORD: talent
            POSTGRES_DB: k3s
          ports:
            - "5432:5432"
          volumes:
            - ./data:/var/lib/postgresql/data
            - ./init-db.sh:/docker-entrypoint-initdb.d/init-db.sh

- Create the script init-db.sh

      #!/bin/bash
      set -e
      # Create the extra database used later by Harbor
      psql -v ON_ERROR_STOP=1 --username "$POSTGRES_USER" --dbname=postgres <<-EOSQL
      CREATE DATABASE harbor;
      EOSQL

- Start the PostgreSQL container

      docker compose up -d

- Verify the database

      docker exec -it postgres psql -U k3s -c '\l'
                                                List of databases
         Name    | Owner | Encoding | Locale Provider |  Collate   |   Ctype    | Locale | ICU Rules | Access privileges
      -----------+-------+----------+-----------------+------------+------------+--------+-----------+-------------------
       harbor    | k3s   | UTF8     | libc            | en_US.utf8 | en_US.utf8 |        |           |
       k3s       | k3s   | UTF8     | libc            | en_US.utf8 | en_US.utf8 |        |           |
       ...
      (5 rows)
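Right after "docker compose up -d" the database may need a few seconds before it accepts connections, so the verification above can fail on the first try. A minimal sketch of a generic retry helper (the name retry is hypothetical); it is written in POSIX sh and is not specific to PostgreSQL:

```shell
#!/bin/sh
# retry ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Runs COMMAND until it succeeds or ATTEMPTS runs are used up.
# Returns 0 on the first success, 1 if every attempt failed.
retry() {
  attempts=$1
  delay=$2
  shift 2
  i=1
  while [ "$i" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    echo "attempt $i/$attempts failed: $*" >&2
    # No need to wait after the final attempt.
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  return 1
}

# Harmless demo: succeeds on the first attempt.
retry 3 0 true && echo "ready"
```

On the database host this could be used as "retry 30 2 docker exec postgres pg_isready -U k3s", assuming the container name and user from compose.yml above; pg_isready ships with the official postgres image.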

MySQL

MySQL is set up as well, because Rancher (as configured in this guide) works with MySQL but not with PostgreSQL.

- Create compose.yml

      services:
        mysql:
          image: mysql:latest
          container_name: mysql
          restart: unless-stopped
          environment:
            MYSQL_ROOT_PASSWORD: talent
            MYSQL_DATABASE: rancher
            MYSQL_USER: rancher
            MYSQL_PASSWORD: talent
          ports:
            - "3306:3306"
          volumes:
            - ./data:/var/lib/mysql
            - ./init:/docker-entrypoint-initdb.d

- Create the script init/permissions.sql

      -- The rancher user is already created from MYSQL_USER / MYSQL_PASSWORD.
      -- MySQL 8 no longer accepts IDENTIFIED BY inside GRANT, so only grant here.
      GRANT ALL PRIVILEGES ON *.* TO 'rancher'@'%';
      FLUSH PRIVILEGES;

- Start the MySQL container

      docker compose up -d

- Verify the database

      docker exec -it mysql mysql -u rancher -p'talent' -e "SHOW DATABASES;"
      +--------------------+
      | Database           |
      +--------------------+
      | rancher            |
      +--------------------+

Deploy the K3S management cluster

Run everything in this chapter on the management K3S hosts.

Hostnames

- Configure the same /etc/hosts on every master

      127.0.0.1 localhost
      127.0.1.1 master101 # CHANGEME
      192.168.198.100 database
      192.168.198.101 master101
      192.168.198.102 master102
      192.168.198.103 master103
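Because the host names in this article end with the last octet of their IP address, the repeated /etc/hosts entries can be generated instead of typed. A small sketch under that naming assumption; render_hosts is a hypothetical helper, not part of any tool:

```shell
#!/bin/sh
# render_hosts NET_PREFIX FIRST LAST NAME_PREFIX
# Prints one "IP hostname" line per host, assuming the host name is
# NAME_PREFIX followed by the last octet (e.g. 192.168.198.101 master101).
render_hosts() {
  prefix=$1
  first=$2
  last=$3
  name=$4
  n=$first
  while [ "$n" -le "$last" ]; do
    printf '%s.%s %s%s\n' "$prefix" "$n" "$name" "$n"
    n=$((n + 1))
  done
}

# Entries for the three management masters used in this article.
render_hosts 192.168.198 101 103 master
```

The output can be reviewed and then appended to /etc/hosts on each machine, for example with "render_hosts 192.168.198 101 103 master | sudo tee -a /etc/hosts".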

K3S core installation

Automatic installation (recommended)

- With Internet access

  This installs the latest version by default (v1.30.5+k3s1 at the time of writing). Note: k3s:talent is your own database username and password, and every master must use the same token.

      curl -sfL https://get.k3s.io | sh -s - server \
        --datastore-endpoint="postgres://k3s:talent@database:5432/k3s?sslmode=disable" \
        --token K10c71232f051944de58ab058871ac7a85b42cbdee5c6df94deb6bb493c79b15a92::server:3d6c2d90cec2e63db66bde0e6f24013d

- Verify the installation

      kubectl get nodes
      NAME        STATUS   ROLES                  AGE   VERSION
      master101   Ready    control-plane,master   43s   v1.30.5+k3s1

- Install the other masters the same way, then confirm on any master that all of them have joined

      kubectl get nodes
      NAME        STATUS   ROLES                  AGE    VERSION
      master101   Ready    control-plane,master   117s   v1.30.5+k3s1
      master102   Ready    control-plane,master   118s   v1.30.5+k3s1
      master103   Ready    control-plane,master   29s    v1.30.5+k3s1
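When the installation is scripted, reading the node table by eye can be replaced by a count of nodes whose STATUS is exactly Ready. A minimal sketch, assuming the default "kubectl get nodes" column layout shown above; count_ready_nodes is a hypothetical helper name:

```shell
#!/bin/sh
# count_ready_nodes: reads "kubectl get nodes" output on stdin and prints
# how many nodes have STATUS exactly "Ready" (the header line is skipped).
count_ready_nodes() {
  awk 'NR > 1 && $2 == "Ready" { n++ } END { print n + 0 }'
}

# Demo with the sample output from this article instead of a live cluster.
count_ready_nodes <<'EOF'
NAME        STATUS   ROLES                  AGE    VERSION
master101   Ready    control-plane,master   117s   v1.30.5+k3s1
master102   Ready    control-plane,master   118s   v1.30.5+k3s1
master103   Ready    control-plane,master   29s    v1.30.5+k3s1
EOF
```

On a master the live form would be "kubectl get nodes | count_ready_nodes", and the result can be compared with the expected number of masters (3 in this setup).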

Manual binary installation (not recommended)

- Download the binary

      wget https://github.com/k3s-io/k3s/releases/download/vX.X.X/k3s -O /usr/local/bin/k3s
      chmod +x /usr/local/bin/k3s

- Initialize

      k3s server --cluster-init

- Create the systemd service

  Use the same configuration on every master.

      cat <<EOF | sudo tee /etc/systemd/system/k3s.service
      [Unit]
      Description=Lightweight Kubernetes
      Documentation=https://k3s.io
      After=network.target

      [Service]
      ExecStart=/usr/local/bin/k3s server --datastore-endpoint="postgres://k3s:talent@database:5432/k3s?sslmode=disable" --token K10c71232f051944de58ab058871ac7a85b42cbdee5c6df94deb6bb493c79b15a92::server:3d6c2d90cec2e63db66bde0e6f24013d
      Restart=always
      RestartSec=5s

      [Install]
      WantedBy=multi-user.target
      EOF

- Reload systemd and enable the service

      systemctl daemon-reload
      systemctl enable k3s
      systemctl start k3s

- Check the cluster status

  This can be run on any master.

      /usr/local/bin/k3s kubectl get nodes

  Result:

      NAME        STATUS   ROLES                  AGE   VERSION
      master101   Ready    control-plane,master   10m   v1.30.4+k3s1
      master102   Ready    control-plane,master   10m   v1.30.4+k3s1
      master103   Ready    control-plane,master   81s   v1.30.4+k3s1

Joining masters / workers manually (if necessary)

- Get the cluster token

      cat /var/lib/rancher/k3s/server/node-token

- Join a master directly (not recommended)

      k3s server --server https://master101:6443 --token <token>

  Alternatively, edit /etc/systemd/system/k3s.service.

- Join a worker (optional)

  Replace <node-token> with your own token.

      cat <<EOF | sudo tee /etc/systemd/system/k3s-agent.service
      [Unit]
      Description=Lightweight Kubernetes
      Documentation=https://k3s.io
      After=network.target

      [Service]
      ExecStart=/usr/local/bin/k3s agent --server https://master101:6443 --token <node-token>
      Restart=always
      RestartSec=5s

      [Install]
      WantedBy=multi-user.target
      EOF

- Reload systemd and enable the agent service

      systemctl daemon-reload
      systemctl enable k3s-agent
      systemctl start k3s-agent

Whichever method you use, the three-host K3S base cluster must be up and running before you continue.

Install the Rancher cluster manager and the Harbor registry

Preparation

Install Helm, the Kubernetes package manager

- Install Helm on one master, or on all of them

      curl -fsSL https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3 | bash -

- Set the environment variable

  Append it to /etc/profile so that it is set on every login.

      echo "export KUBECONFIG=/etc/rancher/k3s/k3s.yaml" >> /etc/profile && source /etc/profile

Install the certificate manager cert-manager

- Install with Helm

      kubectl apply -f https://github.com/cert-manager/cert-manager/releases/download/v1.15.3/cert-manager.crds.yaml
      helm repo add jetstack https://charts.jetstack.io
      helm repo update
      helm install cert-manager jetstack/cert-manager --namespace cert-manager \
        --create-namespace --version v1.15.3

- Verify the installation

      kubectl get pods --namespace cert-manager
      NAME                                       READY   STATUS    RESTARTS   AGE
      cert-manager-9647b459d-9jwpj               1/1     Running   0          2m47s
      cert-manager-cainjector-5d8798687c-66r95   1/1     Running   0          2m47s
      cert-manager-webhook-c77744d75-k22h5       1/1     Running   0          2m47s

- Set up a self-signed issuer

  Create issuer-cert.yaml. It defines two certificates, for rancher.yiqisoft.com and hub.yiqisoft.com; cluster services are later reached through these domain names.

      apiVersion: cert-manager.io/v1
      kind: ClusterIssuer
      metadata:
        name: selfsigned-issuer
      spec:
        selfSigned: {}
      ---
      apiVersion: cert-manager.io/v1
      kind: Certificate
      metadata:
        name: rancher-cert
        namespace: cattle-system
      spec:
        secretName: rancher-cert-secret
        issuerRef:
          name: selfsigned-issuer
          kind: ClusterIssuer
        commonName: rancher.yiqisoft.com
        duration: 315360000s
        renewBefore: 240h
        dnsNames:
          - rancher.yiqisoft.com
      ---
      apiVersion: cert-manager.io/v1
      kind: Certificate
      metadata:
        name: hub-cert
        namespace: harbor
      spec:
        secretName: hub-cert-secret
        issuerRef:
          name: selfsigned-issuer
          kind: ClusterIssuer
        commonName: hub.yiqisoft.com
        duration: 315360000s
        renewBefore: 240h
        dnsNames:
          - hub.yiqisoft.com

- Install the custom certificates

  Create the two namespaces first, then apply the file.

      kubectl create namespace harbor
      kubectl create namespace cattle-system
      kubectl apply -f issuer-cert.yaml

  Output:

      clusterissuer.cert-manager.io/selfsigned-issuer created
      certificate.cert-manager.io/rancher-cert created
      certificate.cert-manager.io/hub-cert created
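The duration and renewBefore values in issuer-cert.yaml are easier to judge once converted to days. A quick worked calculation in shell arithmetic; seconds_to_days is a hypothetical helper, and years are counted as 365 days:

```shell
#!/bin/sh
# seconds_to_days SECONDS: integer number of whole days in SECONDS.
seconds_to_days() {
  echo $(( $1 / 86400 ))
}

# duration: 315360000s
days=$(seconds_to_days 315360000)
echo "certificate lifetime: $days days (about $(( days / 365 )) years)"

# renewBefore: 240h
echo "renewal starts $(( 240 / 24 )) days before expiry"
```

So the certificates are valid for 3650 days, roughly ten years, and cert-manager will try to renew them 10 days before they expire.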

Install Rancher

- Add the Rancher Helm repository

      helm repo add rancher-latest https://releases.rancher.com/server-charts/latest
      helm repo update

- Install Rancher

  This step takes a while because the images are downloaded from the Internet.

      helm install rancher rancher-latest/rancher \
        --namespace cattle-system \
        --set hostname=rancher.yiqisoft.com \
        --set replicas=3 \
        --set extraEnv[0].name=CATTLE_DATABASE_ENDPOINT \
        --set extraEnv[0].value=database:3306 \
        --set extraEnv[1].name=CATTLE_DATABASE_USER \
        --set extraEnv[1].value=rancher \
        --set extraEnv[2].name=CATTLE_DATABASE_PASSWORD \
        --set extraEnv[2].value=talent \
        --set extraEnv[3].name=CATTLE_DATABASE_NAME \
        --set extraEnv[3].value=rancher

- Verify the installation

      kubectl get pods --namespace cattle-system

  Result:

      NAME                               READY   STATUS    RESTARTS   AGE
      rancher-567cc5c6ff-bzx42           1/1     Running   0          65m
      rancher-567cc5c6ff-mgvns           1/1     Running   0          86m
      rancher-567cc5c6ff-rbgjn           1/1     Running   0          86m
      rancher-webhook-77b49b9ff9-mp2dm   1/1     Running   0          142m

Install Harbor

Harbor serves as the internal Docker hub.

Using Ceph (recommended)

Prepare a Ceph cluster for storage (not covered here).

Using NFS

NFS is simple and practical, but it is not very fast and offers only moderate security.

- Deploy NFS on a file server; this guide uses the database host

      apt update
      apt install nfs-kernel-server -y
      mkdir -p /opt/nfs_share
      chown nobody:nogroup /opt/nfs_share
      chmod 777 /opt/nfs_share
      echo "/opt/nfs_share *(rw,sync,no_subtree_check)" >> /etc/exports
      exportfs -ra
      systemctl restart nfs-kernel-server

Deploy the PV and PVC

- Edit pv-pvc.yaml

      apiVersion: v1
      kind: PersistentVolume
      metadata:
        name: nfs-pv
      spec:
        capacity:
          storage: 30Gi
        accessModes:
          - ReadWriteMany
        nfs:
          path: /opt/nfs_share
          server: database
      ---
      apiVersion: v1
      kind: PersistentVolumeClaim
      metadata:
        name: nfs-pvc
      spec:
        accessModes:
          - ReadWriteMany
        resources:
          requests:
            storage: 10Gi
        volumeName: nfs-pv
        storageClassName: ""

- Apply it to the harbor namespace

      kubectl apply -f pv-pvc.yaml -n harbor

  Result:

      persistentvolume/nfs-pv created
      persistentvolumeclaim/nfs-pvc created

- Check the result; nfs-pvc must be Bound

      kubectl get pvc -n harbor

  Result:

      NAME      STATUS   VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS   VOLUMEATTRIBUTESCLASS   AGE
      nfs-pvc   Bound    nfs-pv   30Gi       RWX                           <unset>                 92s

- Check nfs-pv; it must be Bound as well

      kubectl get pv -n harbor

  Result:

      NAME     CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM            STORAGECLASS   VOLUMEATTRIBUTESCLASS   REASON   AGE
      nfs-pv   30Gi       RWX            Retain           Bound    harbor/nfs-pvc                  <unset>                          97s

Deploy Harbor with Helm

- Install the NFS dependency

  nfs-common must be installed on every master. Optionally verify that the share can be mounted and that /tmp/nfs is accessible.

      apt install nfs-common -y
      mkdir /tmp/nfs
      mount -t nfs database:/opt/nfs_share /tmp/nfs
      ls -l /tmp/nfs

- Add the Harbor Helm repository

      helm repo add harbor https://helm.goharbor.io
      helm repo update

- Export the default values and modify them

      helm show values harbor/harbor > harbor-values.yml

      # Change the following values
      expose:
        ingress:
          hosts:
            core: hub.yiqisoft.com
      externalURL: https://hub.yiqisoft.com
      persistence:
        persistentVolumeClaim:
          registry:
            existingClaim: "nfs-pvc"
            storageClass: "-"
      database:
        type: external
        external:
          host: "192.168.198.100"
          port: "5432"
          username: "k3s"
          password: "talent"
          coreDatabase: "harbor"
      harborAdminPassword: "Harbor12345"

- Install Harbor. All images are downloaded from the Internet, so the time needed depends on your bandwidth

      helm install harbor harbor/harbor -f harbor-values.yml -n harbor

- Confirm that Harbor is installed

      kubectl get pods -n harbor

  All pods should show READY.

      NAME                               READY   STATUS    RESTARTS   AGE
      harbor-core-666cf84bc6-vq8mp       1/1     Running   0          85s
      harbor-jobservice-c68bc669-6twbp   1/1     Running   0          85s
      harbor-portal-d6c99f896-cq9fx      1/1     Running   0          85s
      harbor-redis-0                     1/1     Running   0          84s
      harbor-registry-5984c768c8-4xnrg   2/2     Running   0          84s
      harbor-trivy-0                     1/1     Running   0          84s

- Verify that Harbor is working

      curl -k https://hub.yiqisoft.com/v2/ -u admin:Harbor12345

  A response of {} means success.

      {}

Run the following on the database host.

- Log in to Harbor

      docker login hub.yiqisoft.com -u admin -p Harbor12345

  Login succeeds:

      Login Succeeded

- Push the existing PostgreSQL image as a test

      docker tag postgres:alpine hub.yiqisoft.com/library/postgres:alpine
      docker push hub.yiqisoft.com/library/postgres:alpine

  Result:

      The push refers to repository [hub.yiqisoft.com/library/postgres]
      284f9fec9bcb: Pushed
      07188499987f: Pushed
      ...
      63ca1fbb43ae: Pushed
      alpine: digest: sha256:f0407919d966a86e9***c81641801e43c29b size: 2402
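The manual tag above maps postgres:alpine to the library project of the private registry. When many images need retagging, that mapping can be computed. A minimal sketch, assuming that images without a namespace go to library and that namespaced images keep their path; target_ref is a hypothetical helper, and it does not handle source references that already carry a registry host such as ghcr.io/...:

```shell
#!/bin/sh
# target_ref REGISTRY IMAGE
# Prints the reference IMAGE should get in the private registry.
#   postgres:alpine           -> REGISTRY/library/postgres:alpine
#   edgexfoundry/redis:latest -> REGISTRY/edgexfoundry/redis:latest
target_ref() {
  registry=$1
  image=$2
  case $image in
    */*) printf '%s/%s\n' "$registry" "$image" ;;
    *)   printf '%s/library/%s\n' "$registry" "$image" ;;
  esac
}

target_ref hub.yiqisoft.com postgres:alpine
target_ref hub.yiqisoft.com edgexfoundry/redis:latest
```

A retag loop would then run "docker tag" with the original image and the printed target for each entry, before pushing.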

Batch-upload the required images to Harbor

Run this on the machine where the Docker images have been prepared.

- Export the list of required images

      docker images --format "{{.Repository}}:{{.Tag}}" | grep yiqisoft.com > images.txt

- Create /etc/docker/daemon.json to skip certificate verification

      {
        "insecure-registries": ["0.0.0.0/0"]
      }

- Restart Docker

      systemctl restart docker

- Create the script push-images.sh

      #!/bin/bash

      # Check that exactly one argument is provided
      if [ $# -ne 1 ]; then
        echo "Usage: $0 <path_to_images_file>"
        exit 1
      fi

      # Assign the first argument to the file variable
      file=$1

      # Check that the file exists
      if [ ! -f "$file" ]; then
        echo "File not found: $file"
        exit 1
      fi

      # Push every image listed in the file, one reference per line
      while IFS= read -r image; do
        echo "Pushing image: $image"
        if docker push "$image"; then
          echo "Successfully pushed $image"
        else
          echo "Failed to push $image"
        fi
      done < "$file"

- Log in to Harbor and push the images

      docker login hub.yiqisoft.com -u admin -p Harbor12345
      bash push-images.sh images.txt

- Check the result in the Harbor registry

      curl -k https://hub.yiqisoft.com/v2/_catalog -u admin:Harbor12345 | json_pp

  Result:

      {
        "repositories" : [
          "edgexfoundry/app-service-gateway",
          "edgexfoundry/consul",
          "edgexfoundry/core-command",
          "edgexfoundry/core-common-config-bootstrapper",
          "edgexfoundry/core-data",
          "edgexfoundry/core-metadata",
          "edgexfoundry/device-modbus",
          "edgexfoundry/device-onvif-camera",
          "edgexfoundry/device-openvino-face-recognition",
          "edgexfoundry/device-openvino-object-detection",
          "edgexfoundry/edgex-ui",
          "edgexfoundry/redis",
          "edgexfoundry/support-notifications",
          "edgexfoundry/support-scheduler",
          "library/hello-world",
          "library/postgres",
          "rancher/system-agent",
          "yiqisoft/media_server",
          "yiqisoft/model_server",
          "yiqisoft/nginx",
          "yiqisoft/nvr"
        ]
      }

Deploy the K3S business cluster

Note: all of the following steps are performed in the newly created business cluster.

All masters and workers join the business cluster

Create a new cluster in Rancher

- Done in the Rancher UI (steps omitted). Cluster Name: yiqisoft, Kubernetes Version: 1.30.4+k3s1

Join the masters (initialize the business cluster)

- Join through the Registration page of the new cluster; a custom namespace can be defined. How many masters you need depends on your workload.

- Import the hub CA

      echo | openssl s_client -showcerts -connect hub.yiqisoft.com:443 2>/dev/null | \
        openssl x509 -outform PEM > /etc/ssl/certs/hub.yiqisoft.com.crt

- Run the following on each of master151 to master153; host names must be unique

      curl --insecure -fL https://rancher.yiqisoft.com/system-agent-install.sh | \
        sudo sh -s - --server https://rancher.yiqisoft.com --label 'cattle.io/os=linux' \
        --token xft4fsrjgkn4rzqxrgrb4mztkcxtz4nhpwm58t84q54lvpsscdgd72 \
        --ca-checksum 30d78ad0ece6355c40a4b3764ba02dfc96388a9c20f6b4e2bffb131cf7402c1f \
        --etcd --controlplane --worker

- Check the nodes of the business cluster

      kubectl get nodes

  Confirm that all masters are online.

      NAME        STATUS   ROLES                              AGE     VERSION
      master151   Ready    control-plane,etcd,master,worker   32m     v1.30.4+k3s1
      master152   Ready    control-plane,etcd,master,worker   3h39m   v1.30.4+k3s1
      master153   Ready    control-plane,etcd,master,worker   23m     v1.30.4+k3s1
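The --ca-checksum value in the registration command is generated by the Rancher UI. As far as I know it is the SHA-256 digest of the CA certificate that Rancher serves, so it can be recomputed from a locally saved PEM file when a join fails with a checksum mismatch. A sketch under that assumption; file_sha256 is a hypothetical helper and the way the PEM file is obtained is left to you:

```shell
#!/bin/sh
# file_sha256 PATH: prints only the hex SHA-256 digest of the file at PATH.
# Relies on sha256sum from GNU coreutils, which Ubuntu ships by default.
file_sha256() {
  sha256sum "$1" | awk '{ print $1 }'
}

# Demo on an empty input; for a real check, pass the saved CA PEM file
# and compare the result with the value after --ca-checksum.
file_sha256 /dev/null
```

If the two values differ, the node is most likely seeing a different certificate chain than the one Rancher used when it generated the registration command.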

Join the workers to the business cluster

- First, write the hub certificate directly into the host certificate directory with openssl

  containerd needs to trust this CA certificate when pulling images.

      echo | openssl s_client -showcerts -connect hub.yiqisoft.com:443 2>/dev/null | \
        openssl x509 -outform PEM > /etc/ssl/certs/hub.yiqisoft.com.crt

- Wait until the masters have joined (at least one must be registered successfully), then join the worker hosts through Registration again. Select only the Worker role and define a namespace label, for example namespace=worker01, so that applications can later be deployed into a dedicated namespace for isolation.

- Run the following on every business worker; again, host names must be unique

      curl --insecure -fL https://rancher.yiqisoft.com/system-agent-install.sh | \
        sudo sh -s - \
        --server https://rancher.yiqisoft.com \
        --label 'cattle.io/os=linux' \
        --token xft4fsrjgkn4rzqxrgrb4mztkcxtz4nhpwm58t84q54lvpsscdgd72 \
        --ca-checksum 30d78ad0ece6355c40a4b3764ba02dfc96388a9c20f6b4e2bffb131cf7402c1f \
        --worker \
        --label namespace=worker161

- Verify the worker node

      kubectl get nodes -A

  The result looks normal:

      NAME        STATUS   ROLES                              AGE     VERSION
      master151   Ready    control-plane,etcd,master,worker   74m     v1.30.4+k3s1
      master152   Ready    control-plane,etcd,master,worker   4h21m   v1.30.4+k3s1
      master153   Ready    control-plane,etcd,master,worker   65m     v1.30.4+k3s1
      worker161   Ready    worker                             25m     v1.30.4+k3s1

(Deploy/update) the Harbor hub CA certificate on all masters and workers

(Manual) Export the Harbor hub HTTPS certificate

- Add the self-signed certificate to ca-certificates

  Run on every master and worker (not very practical).

      echo | openssl s_client -showcerts -connect hub.yiqisoft.com:443 2>/dev/null | \
        openssl x509 -outform PEM > /etc/ssl/certs/hub.yiqisoft.com.crt

(Automatic) Deploy the Harbor hub CA certificate

Create a Secret

- In the business cluster, import the Harbor certificate into the kube-system namespace

      kubectl create secret generic hub-ca --from-file=ca.crt=/hub.yiqisoft.com.crt -n kube-system

- Check it

      kubectl get secret -A | grep hub-ca

  Result:

      NAMESPACE     NAME     TYPE                DATA   AGE
      kube-system   hub-ca   kubernetes.io/tls   2      101m

Create a DaemonSet

- Create a manifest, ca-distributor.yaml, that distributes the hub certificate to every node

  This is essential; without it, nodes cannot pull images from the private registry.

      apiVersion: apps/v1
      kind: DaemonSet
      metadata:
        name: ca-distributor
        namespace: kube-system
      spec:
        selector:
          matchLabels:
            name: ca-distributor
        template:
          metadata:
            labels:
              name: ca-distributor
          spec:
            containers:
              - name: ca-distributor
                image: busybox:latest
                command:
                  - /bin/sh
                  - -c
                  # ca.crt is the key name given when the hub-ca secret was created
                  - "cp /etc/ca-certificates/ca.crt /etc/ssl/certs/hub.yiqisoft.com.crt && echo 'Certificate Updated' && sleep 3600"
                volumeMounts:
                  - name: ca-cert
                    mountPath: /etc/ca-certificates
                  - name: ssl-certs
                    mountPath: /etc/ssl/certs
            volumes:
              - name: ca-cert
                secret:
                  secretName: hub-ca
              - name: ssl-certs
                hostPath:
                  path: /etc/ssl/certs
                  type: Directory
            restartPolicy: Always

- Apply the manifest

      kubectl apply -f ca-distributor.yaml

- Verify the result and make sure the pod runs on every node

      kubectl get pods -A | grep ca-distributor
      kube-system   ca-distributor-ckhgh   1/1   Running   0   100s
      kube-system   ca-distributor-hqzwt   1/1   Running   0   101s
      kube-system   ca-distributor-lj72n   1/1   Running   0   101s
      kube-system   ca-distributor-mpp7m   1/1   Running   0   101s

- Pull an image on a worker

      k3s ctr i pull hub.yiqisoft.com/library/hello-world:latest

  Result:

      hub.yiqisoft.com/library/hello-world:latest:                                      resolved       |++++++++++++++++++++++++++++++++++++++|
      manifest-sha256:d37ada95d47ad12224c205a938129df7a3e52345828b4fa27b03a98825d1e2e7: done           |++++++++++++++++++++++++++++++++++++++|
      config-sha256:d2c94e258dcb3c5ac2798d32e1249e42ef01cba4841c2234249495f87264ac5a:   done           |++++++++++++++++++++++++++++++++++++++|
      layer-sha256:c1ec31eb59444d78df06a974d155e597c894ab4cda84f08294145e845394988e:    done           |++++++++++++++++++++++++++++++++++++++|
      elapsed: 0.6 s                                                                    total:  524.0  (871.0 B/s)
      unpacking linux/amd64 sha256:d37ada95d47ad12224c205a938129df7a3e52345828b4fa27b03a98825d1e2e7...
      done: 54.873311ms

Deploy business applications on K3S (edge computing framework)

All deployments below are done with Helm charts, which you need to write yourself.

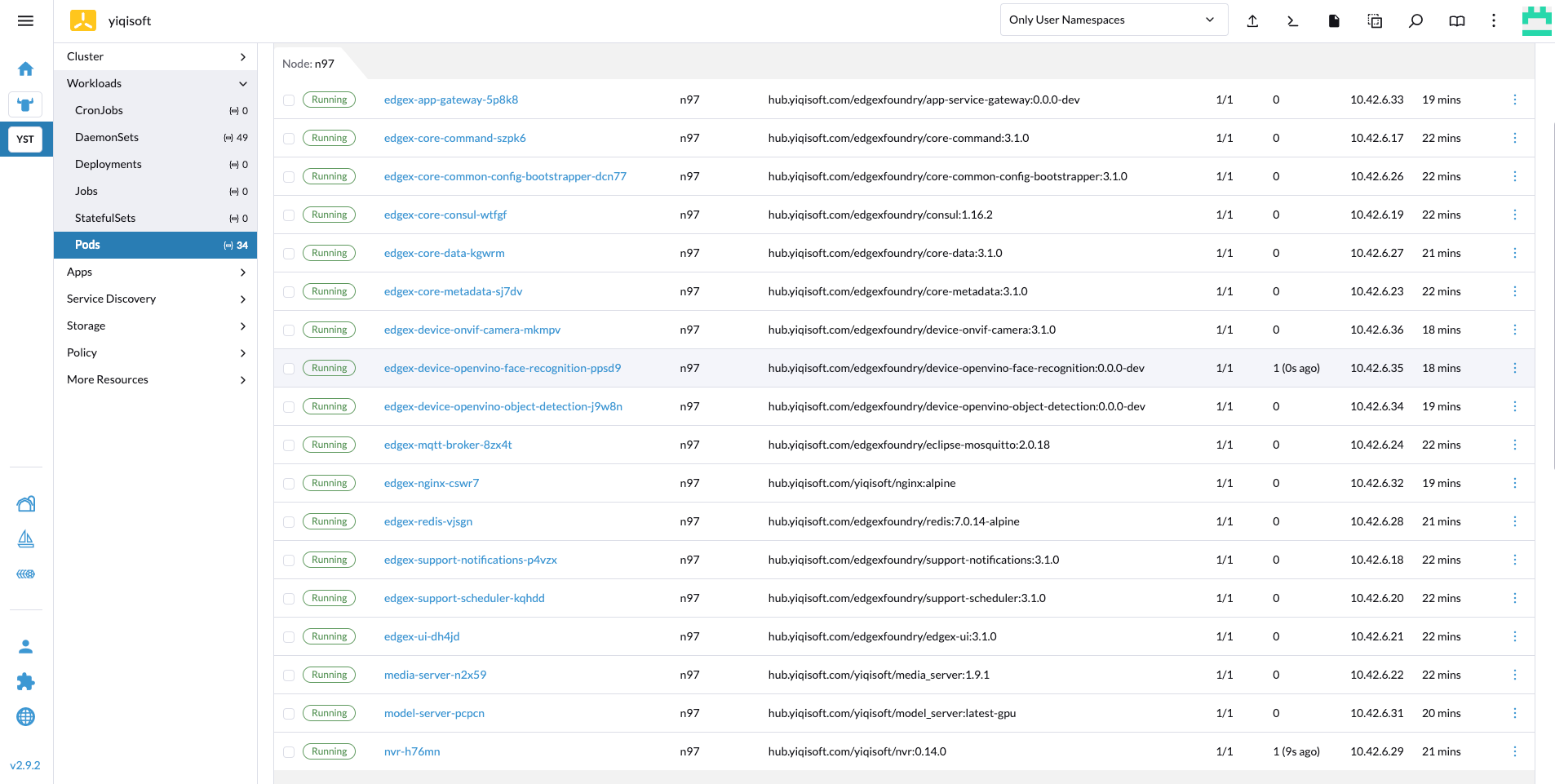

EdgeX Foundry Base

- Result of deploying the base framework

      kubectl get pods -n worker161
      NAME                                          READY   STATUS    RESTARTS   AGE
      edgex-core-command-tspw7                      1/1     Running   0          5m41s
      edgex-core-common-config-bootstrapper-92q25   1/1     Running   0          5m25s
      edgex-core-consul-8lqxz                       1/1     Running   0          6m4s
      edgex-core-data-nv26d                         1/1     Running   0          6m23s
      edgex-core-metadata-l6c49                     1/1     Running   0          5m3s
      edgex-device-onvif-camera-5tt4w               1/1     Running   0          3m26s
      edgex-mqtt-broker-j2jdr                       1/1     Running   0          81s
      edgex-redis-rf4v7                             1/1     Running   0          4m50s
      edgex-support-notifications-ws6l5             1/1     Running   0          4m28s
      edgex-support-scheduler-drx2p                 1/1     Running   0          3m56s
      edgex-ui-v55vm                                1/1     Running   0          3m45s

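Deploy supporting applications: media streaming, inference server, NVR

- Deploy the supporting applications

  The business pods depend on one another. A pod cannot work until the pods it depends on are up, so it keeps retrying until they are.

      kubectl get pods -n worker161 | grep -v edgex

  Result:

      NAME                 READY   STATUS    RESTARTS        AGE
      media-server-wtjxk   1/1     Running   1 (5m29s ago)   13m
      model-server-9fpb2   1/1     Running   0               89s
      nvr-gqlst            1/1     Running   2 (5m29s ago)   11m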
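The retries caused by these start-up dependencies show up in the RESTARTS column. A small sketch that lists only the pods that restarted at least once, assuming single-namespace "kubectl get pods" output as shown above (with -A the columns shift by one); restarted_pods is a hypothetical helper name:

```shell
#!/bin/sh
# restarted_pods: reads "kubectl get pods -n <namespace>" output on stdin
# and prints "<pod name> <restart count>" for pods with RESTARTS > 0.
restarted_pods() {
  awk 'NR > 1 && $4 + 0 > 0 { print $1, $4 }'
}

# Demo with the sample output from this article instead of a live cluster.
restarted_pods <<'EOF'
NAME                 READY   STATUS    RESTARTS        AGE
media-server-wtjxk   1/1     Running   1 (5m29s ago)   13m
model-server-9fpb2   1/1     Running   0               89s
nvr-gqlst            1/1     Running   2 (5m29s ago)   11m
EOF
```

A few restarts right after deployment are expected here; a count that keeps growing usually means a dependency never came up.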

Deploy the OpenVINO AI applications

- Deploy the AI application pods based on the OpenVINO inference framework

      kubectl get pods -n worker161 | grep openvino

  Result:

      NAME                                           READY   STATUS    RESTARTS   AGE
      edgex-device-openvino-face-recognition-bgch4   1/1     Running   0          5m43s
      edgex-device-openvino-object-detection-tbvwk   1/1     Running   0          7m21s

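Summary

- This deployment scheme can manage EdgeX-based edge computing solutions. EdgeX is autonomous at the edge: it keeps working normally even when it is disconnected from the K3S cluster.

- The scheme can also manage thousands of edge devices without them interfering with one another, which makes it suitable for large projects.

- Cloud-side configuration is relatively complex, but the edge side is much simpler: a node only has to be registered with the K3S cluster manually, and everything else can be deployed and managed through the K8S API.

- Compared with K8S, K3S is well suited to the edge: it uses fewer resources and is easier to manage.

- In short, K3S is a good fit for distributing and managing containers at the edge.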